AI is Now Emotionally Intelligent. How Can Healthcare Leverage It?

Emotional intelligence matters a great deal in healthcare, especially for physicians, who rely on it to build trust with patients.

Our emotions are among the most important characteristics that define what it means to be human. Emotional intelligence, the ability to identify what others feel, empathize with them, and respond appropriately, is a capability that defines human nature.

Machines, on the other hand, have always been considered emotion-agnostic. Consider a conversational technology platform such as Alexa. It will understand your command and process your request, but it fails to fully understand sarcasm, humor, disappointment, or other human emotions.

However, in an era of ongoing efforts to make machines more and more intelligent, one question looms large: can AI be emotionally intelligent?

The answer is not far away. Recently, New York-based AI startup Hume AI launched its first voice AI with emotional intelligence, which can generate conversations aimed at the emotional well-being of its users.

Its voice AI, trained on a large dataset of human conversations to identify voice tonality, human reflexes, and feelings, can emulate them across 23 different emotions.

Unlike other AI models, this one is built on what Hume AI calls an empathic large language model (eLLM).

Alan Cowen, Hume AI’s founder, shared the news on LinkedIn and wrote that the first AI with emotional intelligence was built to understand the voice beyond words.

“Based on your voice, it can better predict when to speak, what to say, and how to say it,” he noted.

But Hume AI is not the only one working to make AI more empathetic. Today, AI models using facial recognition, voice analysis, and behavioral analysis are trying to understand users’ emotions and respond with empathy.

For example, RealEyes deploys facial recognition to identify viewers’ emotions while they watch an advertisement.

Cogito, on the other hand, analyzes voice conversations, and its AI promptly supplies agents with on-the-spot feedback regarding customer sentiment and emotional cues.

Thus, while generative AI is disrupting many sectors, emotionally intelligent AI is likely to prove more effective for people-facing functions, including those in healthcare.


AI with More EQ than Physicians?

EQ is especially important in healthcare, particularly for physicians, who need it to build trust, alleviate concerns, and clear up patients' confusion. It is key to successful patient engagement.

For a long time, medical professionals believed that AI would not be able to emulate the compassion, empathy, and sensitivity that a real-world healthcare professional can show. A study conducted in 2023, however, suggests otherwise.

According to the study, published in JAMA Internal Medicine, an AI chatbot provided high-quality and empathetic answers to patient questions. The researchers found that physician responses were 41% less empathetic than chatbot responses.

While the study took the medical community by storm, it also highlighted a gap in patient-doctor communication: empathy.

There are multiple reasons why doctors’ interactions with their patients could seem devoid of empathy: little emphasis on EQ in medical education, clinical burden, lack of time, and so on.

In a similar experiment, Google researchers shared that their AI proved to be more accurate and empathetic than real-life physicians.

Twenty actors posing as patients participated in online text-based medical consultations and weren't told whether they were communicating with AI or real-life clinicians.

According to the study, the AI outperformed the human physicians on 24 of 26 conversational measures, including showing empathy, being polite, and putting patients at ease.

These studies sparked conversation about the potential of AI to solve medicine’s empathy problem.


What Can Emotionally Intelligent AI Do?

Since the COVID-19 pandemic, the dynamics of healthcare have changed. While in-person consultation is not obsolete, the number of people opting for online consultation has grown worldwide.

For a doctor, spending 15 minutes with a patient on a video call may not give a full picture of the patient's condition. Emotionally intelligent AI that can recognize facial expressions, voice tone, and behavior can monitor non-verbal cues during a remote healthcare appointment.

In a physical setting, it can monitor signs of discomfort among patients in the waiting queue and determine who requires urgent care.

Based on data collected from wearable devices, such as blood pressure and heart rate, it can identify whether the user is distressed, anxious, or happy, giving users more insight into the triggers that may induce these emotions.

Emotional AI may also have specific uses for particular medical conditions. For example, it can help autistic people interpret others’ feelings and thus improve their social skills.

Similarly, it can alert caregivers to the emotional state of dementia patients by monitoring their facial expressions, speech, and behavioral patterns.


Empathetic AI in Mental Health

The COVID-19 pandemic and the resulting lockdowns took a toll not only on our physical health but also on our mental health.

A WHO report in 2022 highlighted that in the first year of the COVID-19 pandemic, the global prevalence of anxiety and depression increased by a massive 25%.

Rising loneliness and a lack of social interaction, alongside the growing use of social media and the internet, have only worsened the situation.

However, emotionally intelligent AI is providing virtual companions that can understand a user's emotional state and guide them through it.

Navigating a conversation with someone going through anxiety or depression can be challenging for other people, because of the emotional toll it may take on the listener or a lack of understanding of these issues.

However, AI chatbots could interact with users in a more understanding and empathetic manner, ask questions, alert authorities or users’ loved ones about their condition, and provide the support needed.

The potential of emotional AI applications in the mental health space is massive. The global emotion-AI market is projected to be worth $13.8 billion by 2032.

Emotionally Intelligent AI Apps in Healthcare

One example of emotional AI in the mental health space is Woebot, an AI-powered digital companion that provides therapy through its app.

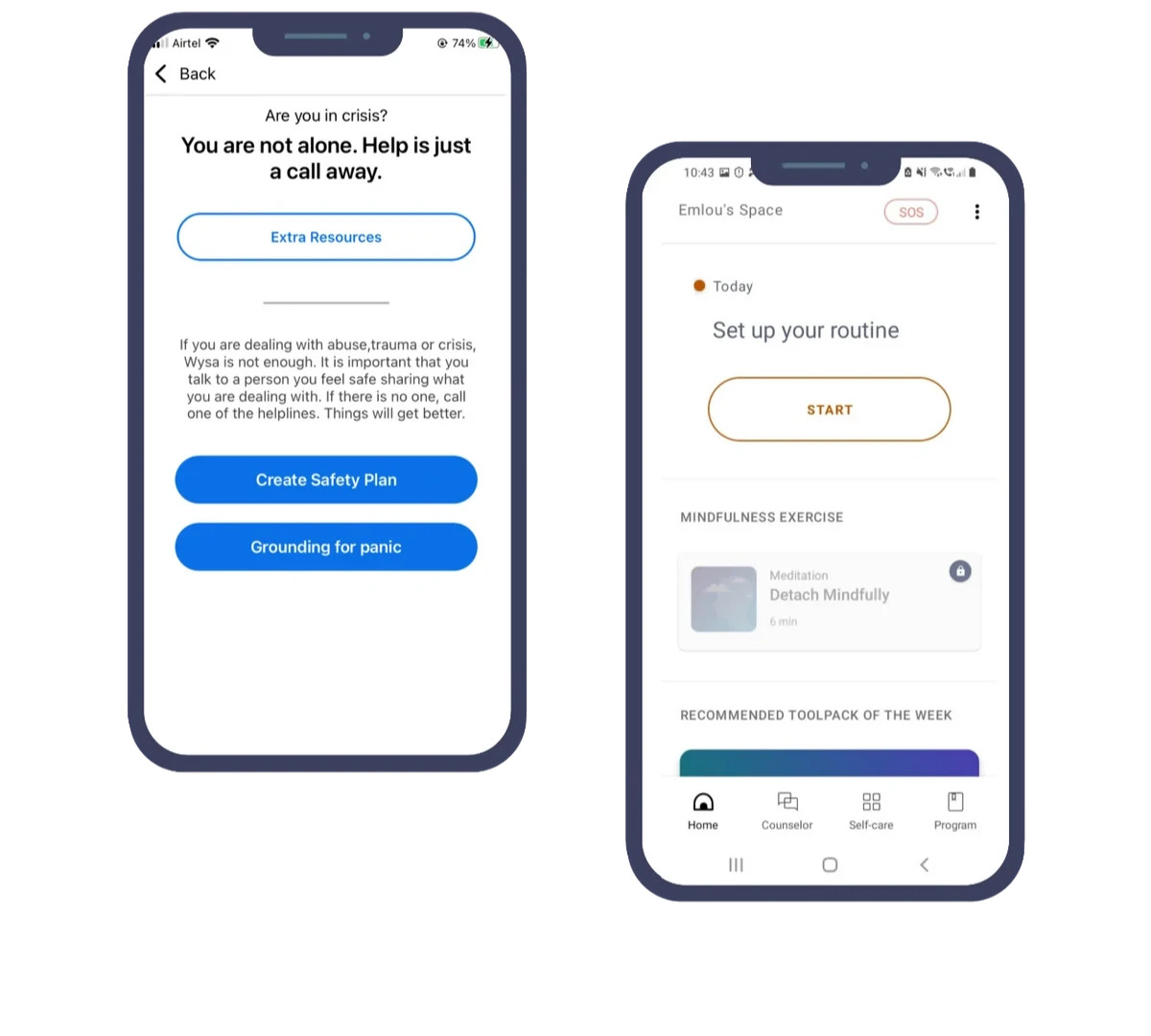

Similarly, the made-in-India app Wysa also offers a text-based online messaging service with a highly trained and qualified mental health and well-being professional.

Massachusetts-based Affectiva was one of the early movers in using AI to analyze user emotions.

One of the big use cases for Affectiva's technology in healthcare is academic research, including studies on autism, depression, nutrition, mental well-being, mood, stress, and anger management.

Canadian tech firm NuraLogix, on the other hand, developed a technique called Transdermal Optical Imaging that can detect emotions and analyze their impact based on facial blood flow information captured via a smartphone, tablet, or laptop camera.

In 2021, Cognovi Labs, an emotionally intelligent AI firm, launched a health platform for decision-makers at biopharma and medical device companies to help them analyze patients’ emotions and shape content that would trigger specific emotions in them.

How Wysa's AI-powered mental health app works. (Image Source: Wysa)

Challenges & Risks

Every coin has two sides. Likewise, the potential of emotionally intelligent AI in healthcare comes with its share of risks.

Some fundamental concerns are ethical and philosophical while some relate to privacy.

Concerns have been raised about the accuracy of these AI models in predicting human emotions.

Human emotions are complex by nature. According to critics, emotional AI models oversimplify and decontextualize human emotions. Many researchers have also warned against synthetic empathy.

The risk of bias is another huge concern. Emotions and expressions are subjective and have cultural and societal contexts. A particular gesture may have different meanings in different societies.

For example, a study showed that emotional analysis technology assigns more negative emotions to black men’s faces than to white men’s faces. Such biases may lead to inaccurate analysis, which could be detrimental in the healthcare space.

Moreover, it opens a debate on AI breaching personal boundaries. As emotionally intelligent AI collects and analyzes data such as voice tone, gestures, and facial expressions, it raises questions about how the data is stored and used.

The Way Forward

There’s no doubt that AI models are only as good as the data they’re trained on. These models are already trained on huge datasets, and they are also learning in real time through their interactions with users.

However, explicit consent must be obtained for the use of emotional AI. In some cases, over-dependency on and attachment to AI-assisted chatbots and virtual companions have been flagged as harmful to users’ mental and emotional well-being.

Thus, maintaining transparency, making users aware of the consequences, giving them choices about the data they want to divulge, and obtaining their explicit consent become important.

The debate around Emotionally Intelligent or Empathetic AI leaves several questions open-ended. Machines are not capable of feeling any emotions, but they can simulate them.

So, while emotionally intelligent AI may not be able to replace human connections, its regulated use can drive positive patient outcomes.
